Introduction

The first, and probably the most important, step for a data scientist is to explore and manipulate the data they are about to work with. This task, known as Exploratory Data Analysis (EDA), is used to gain insights from the data. It enables an in-depth understanding of the dataset, helps define or discard hypotheses, and provides a solid basis for building predictive models.

A range of data manipulation techniques and statistical tools exist for performing EDA.

In this resource we describe some of the most widely used tools for exploring and manipulating data.

Description of EDA Tools

The first step in EDA usually involves importing the data so that it can be manipulated. The library most users turn to for this task is Pandas (1).
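As a minimal sketch of this import step, the snippet below reads a small CSV with `pd.read_csv` and takes a first look at it. The CSV content is hypothetical and is held in an in-memory `io.StringIO` buffer so the example runs without a file; in practice you would pass a file path or URL instead.

```python
import io

import pandas as pd

# Hypothetical CSV content standing in for a real file on disk;
# pd.read_csv accepts a path, a URL, or a file-like object like this one.
raw_csv = io.StringIO(
    "city,temperature,humidity\n"
    "Athens,31.5,40\n"
    "Oslo,18.2,72\n"
    "Lima,22.0,\n"
)

df = pd.read_csv(raw_csv)

# First look at the imported data
print(df.shape)         # (number of rows, number of columns)
print(df.dtypes)        # column types inferred by pandas
print(df.head())        # first rows of the table
print(df.isna().sum())  # missing values per column (Lima has no humidity)
print(df.describe())    # summary statistics for the numeric columns
```

Checking shapes, types, and missing values right after import is a cheap way to catch parsing problems before any analysis starts.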

Pandas

Pandas provides high-level data structures and functions designed to make working with data intuitive and flexible. Pandas emerged in 2008 (with an official release in 2010), developed by Wes McKinney to manipulate trading data. Since then, it has helped make Python a powerful and productive data analysis environment. The primary objects in Pandas are the DataFrame, a tabular, column-oriented data structure with both row and column labels, and the Series, a one-dimensional labeled array. Pandas combines the array-computing ideas of NumPy with the kinds of data manipulation capabilities found in spreadsheets and in relational databases queried with SQL. It also provides convenient indexing functionality, enabling reshaping, slicing and dicing, aggregation, and selection of data subsets.
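The following minimal sketch shows the two primary objects and the indexing features mentioned above: selecting a Series, selecting a subset of rows and columns with `.loc`, aggregating with `groupby`, and reshaping with `pivot_table`. The trade records and ticker names (`AAA`, `BBB`) are hypothetical and exist only for illustration.

```python
import pandas as pd

# Hypothetical trade records, used only for illustration
trades = pd.DataFrame(
    {
        "ticker": ["AAA", "BBB", "AAA", "BBB", "AAA"],
        "side": ["buy", "buy", "sell", "sell", "buy"],
        "quantity": [10, 5, 4, 5, 6],
        "price": [190.0, 410.0, 192.0, 415.0, 189.0],
    }
)

# A single column of a DataFrame is a Series (a 1-D labeled array)
prices = trades["price"]

# Selection: rows where side == "buy", restricted to two columns
buys = trades.loc[trades["side"] == "buy", ["ticker", "quantity"]]

# Aggregation: total quantity traded per ticker
total_qty = trades.groupby("ticker")["quantity"].sum()

# Reshaping: a ticker x side table of summed quantities
pivot = trades.pivot_table(
    index="ticker", columns="side", values="quantity", aggfunc="sum"
)
print(pivot)
```

The same operations map closely onto spreadsheet filters and pivot tables, or onto SQL `WHERE` and `GROUP BY` clauses, which is the combination of capabilities described above.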

For deeper numerical manipulation of the data, data scientists use specialized libraries such as NumPy (2) and SciPy (3).

NumPy

NumPy, short for Numerical Python, has long been the pillar of numerical computing in Python. It provides the data structures and algorithms needed for most scientific applications involving numerical data. Among other things, NumPy contains:

- A fast and efficient multidimensional array object

- Functions for element-wise computations with arrays and mathematical operations between arrays

- Linear algebra operations, Fourier transforms, and random number generation

- A mature C API that enables Python extensions
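A minimal sketch touching each of the capabilities listed above, using only small hand-picked arrays (the values are arbitrary and chosen so the results are easy to check by hand):

```python
import numpy as np

# Multidimensional array object (here a 2 x 2 matrix)
a = np.array([[1.0, 2.0], [3.0, 4.0]])
b = np.array([[0.5, 0.0], [0.0, 2.0]])

# Element-wise computations, with no explicit Python loops
elementwise_product = a * b
scaled = a * 10 + 1

# Linear algebra: matrix product, and solving the system a @ x = y
matrix_product = a @ b
y = np.array([5.0, 11.0])
x = np.linalg.solve(a, y)  # 1*1 + 2*2 = 5 and 3*1 + 4*2 = 11, so x = [1, 2]

# Fourier transform of a short discrete signal
signal = np.array([0.0, 1.0, 0.0, -1.0])
spectrum = np.fft.fft(signal)

# Random number generation with a seeded generator for reproducibility
rng = np.random.default_rng(seed=42)
samples = rng.normal(loc=0.0, scale=1.0, size=1000)
```

Note that `a * b` multiplies matching elements, while `a @ b` is the matrix product; mixing the two up is a common source of bugs.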

One of the primary uses of NumPy in data analysis is as a container for data passed between algorithms and libraries. For numerical data, NumPy arrays are more efficient to store and manipulate than the other built-in Python data structures. Thus, many numerical tools for Python either assume NumPy arrays as their primary data structure or target interoperability with NumPy.
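As a small sketch of NumPy acting as the shared container, the snippet below passes an array into pandas, gets an array back, and applies a NumPy function directly to a pandas column. The data are arbitrary values generated with `np.arange`.

```python
import numpy as np
import pandas as pd

# A NumPy array used as a plain numerical container (3 rows x 2 columns)
values = np.arange(6, dtype=np.float64).reshape(3, 2)

# Pass the array to pandas, which wraps it with row and column labels
df = pd.DataFrame(values, columns=["x", "y"])

# Get a NumPy array back, e.g. to feed a routine that expects ndarray input
back = df.to_numpy()

# NumPy universal functions also accept pandas objects and keep their labels
log_x = np.log1p(df["x"])

# The array stores its elements as contiguous raw 8-byte floats:
# 6 elements * 8 bytes = 48 bytes of data
print(values.nbytes)
```

Because the elements are stored as raw machine floats rather than as separate Python objects, the same buffer can be handed from one library to another without conversion in many cases.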

SciPy

SciPy, on the other hand, is a collection of packages addressing a number of foundational problems in scientific computing. Its modules include:

- Numerical integration routines and differential equation solvers

- Linear algebra routines and matrix decompositions (extending beyond those provided by NumPy)

- Signal processing tools

- Sparse matrices and sparse linear system solvers

- Standard continuous and discrete probability distributions, various statistical tests, and descriptive statistics
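A minimal sketch exercising several of these modules: `scipy.integrate` for integration and an ordinary differential equation, `scipy.sparse` for a sparse linear system, and `scipy.stats` for descriptive statistics and a two-sample t-test. The functions, matrix, and random samples are arbitrary illustrations chosen so the expected answers are known in advance.

```python
import numpy as np
from scipy import integrate, sparse, stats
from scipy.sparse.linalg import spsolve

# Numerical integration: integral of sin(x) from 0 to pi (exact value is 2)
area, abs_error = integrate.quad(np.sin, 0.0, np.pi)

# Differential equation: dy/dt = -0.5 * y with y(0) = 1, solved on t in [0, 2]
solution = integrate.solve_ivp(lambda t, y: -0.5 * y, t_span=(0.0, 2.0), y0=[1.0])
y_end = solution.y[0, -1]  # the exact solution gives exp(-1) at t = 2

# Sparse matrix (compressed sparse row format) and a sparse linear solve
A = sparse.csr_matrix(
    np.array([[4.0, 0.0, 0.0], [0.0, 2.0, 0.0], [1.0, 0.0, 1.0]])
)
b = np.array([8.0, 4.0, 3.0])
x = spsolve(A, b)  # 4*x0 = 8, 2*x1 = 4, x0 + x2 = 3, so x = [2, 2, 1]

# Samples from normal distributions, then descriptive statistics
rng = np.random.default_rng(seed=0)
sample_a = rng.normal(loc=0.0, scale=1.0, size=200)
sample_b = rng.normal(loc=0.5, scale=1.0, size=200)
summary = stats.describe(sample_a)  # nobs, minmax, mean, variance, ...

# Statistical test: do the two samples have the same mean?
t_stat, p_value = stats.ttest_ind(sample_a, sample_b)
```

Numerical solvers such as `solve_ivp` use default tolerances, so `y_end` matches `exp(-1)` only approximately.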

Together, NumPy and SciPy form a reasonably complete and mature computational foundation for many traditional scientific computing applications.

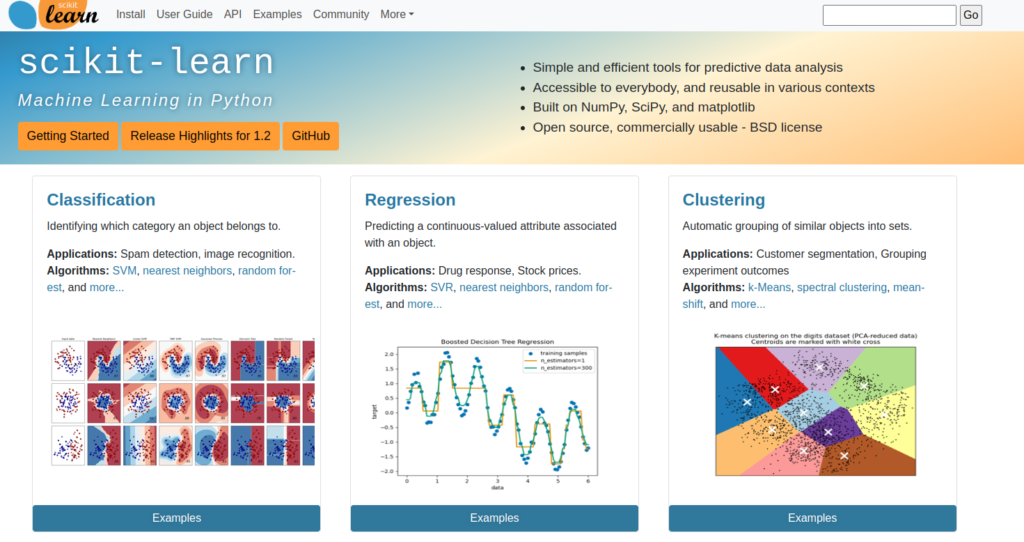

Scikit-Learn

Since its creation in 2007, scikit-learn has become the foremost general-purpose machine learning toolkit for Python programmers (4). It includes submodules for most common modeling tasks, such as:

- Classification: SVM, nearest neighbors, random forest, logistic regression, etc.

- Regression: Lasso, ridge regression, etc.

- Clustering: k-means, spectral clustering, etc.

- Dimensionality reduction: PCA, feature selection, matrix factorization, etc.

- Model selection: Grid search, cross-validation, metrics

- Preprocessing: Feature extraction, normalization
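The sketch below combines several of these submodules on scikit-learn's bundled Iris dataset (used here only because it needs no download): normalization with `StandardScaler`, a logistic regression classifier, a grid search with 5-fold cross-validation, an accuracy metric on held-out data, and, separately, PCA followed by k-means clustering. The parameter grid and split settings are illustrative choices, not recommendations.

```python
from sklearn.cluster import KMeans
from sklearn.datasets import load_iris
from sklearn.decomposition import PCA
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

# Small bundled dataset: 150 flowers, 4 numeric features, 3 classes
X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0, stratify=y
)

# Preprocessing (normalization) chained with a classifier
pipeline = Pipeline(
    [
        ("scale", StandardScaler()),
        ("clf", LogisticRegression(max_iter=1000)),
    ]
)

# Model selection: grid search over the regularization strength C
# using 5-fold cross-validation on the training set
search = GridSearchCV(pipeline, param_grid={"clf__C": [0.1, 1.0, 10.0]}, cv=5)
search.fit(X_train, y_train)

# Metric: accuracy of the best model on the held-out test set
test_accuracy = accuracy_score(y_test, search.predict(X_test))
print(search.best_params_, round(test_accuracy, 3))

# Dimensionality reduction and clustering on the same features
X_2d = PCA(n_components=2).fit_transform(X)
cluster_labels = KMeans(n_clusters=3, n_init=10, random_state=0).fit_predict(X_2d)
```

Putting the scaler inside the pipeline means it is refit on each cross-validation training fold, so no information from the validation folds leaks into preprocessing.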

Along with Pandas, scikit-learn has been critical for enabling Python to be a productive data science programming language.