Quick introduction to Deep Learning

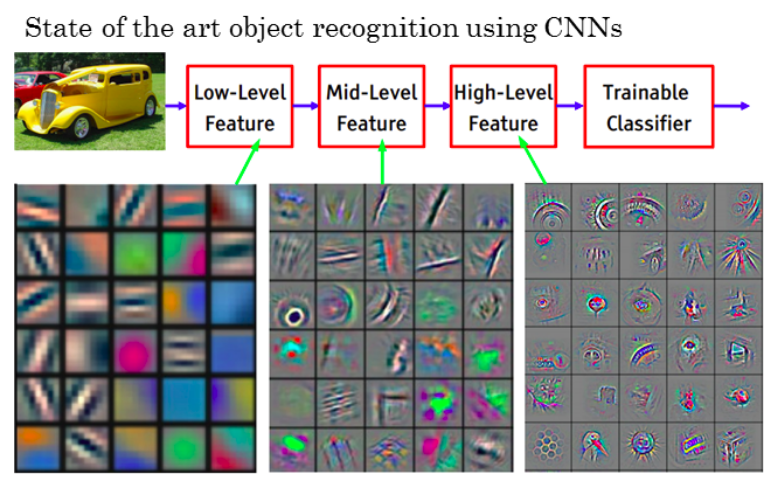

Deep learning is part of the family of artificial intelligence methods and more particularly of machine learning methods. It uses different layers to extract features. The first layer takes as input the raw data (image, text, sound…) the following layer takes as input the output of the previous one. This structure models the data with different levels of abstraction (see Figure 1) starting from a low level on the first layers to high level on the last layers.

Models can be supervised (with human annotation as ground truth) or unsupervised (without human annotation).

Two main appoaches exist, discriminative and generative models. Discriminative models are used for classification (a.k.a. which class is associated to the input data? it is a qualitative prediction) or regression (a.k.a. what is the probability of association of a characteristic to an input data? it is a quantitative prediction), while generative models are used to generate new data (for example the models of the GPT family which chatGPT is a part of). This family of generative models has been trained to predict the next word from a list of words. It can therefore generate a new text which look likes the training data without conforming to what these data contains.

Framework For Deep Learning Models

Many frameworks exist to create deep learning models. We will only quickly detail PyTorch and TensorFlow, but we could also have taken a look at Keras, MXNet, Sonnet…

PyTorch

PyTorch (2) is an open source python library based on Torch developed by Meta from the research work of Ronan Collobert from IDIAP (research institute located in Martigny, Switzerland).

PyTorch creates Tensor objects for working on homogeneous multidimensional arrays of numbers. These arrays represent the input data, the parameters of the different layers of the model and the outputs produced.

PyTorch models are built in four general steps:

- Create the neural network base on the model tensors. The structure can be dynamically adapted during the training step.

- Make a prediction from an input data that would pass through the different layers of the network.

- Calculate the loss function between the prediction made and the ground truth serving as a reference.

- Performed back-propagation to modify the tensors of the layers to reduce the loss function and therefore improve predictions.

Calculus are optimized and can be performed either on CPU or on GPUs supporting CUDA. This last solution allows a very strong increase of the learning speed. Many manuals and tutorials are available to find examples and the developer community continues to grow. PyTorch has a python interface to work directly on tensors without going through a higher level library. This integration allows to use Python debugging tools which facilitates the search for errors and the development of new models

TensorFlow

TensorFlow is an open source library developed by Google (3). It offers a frontend API in python to create models which will then be executed in C++.

Development is done by creating dataflow graphs. Theses graph are structures that describe a series of processing nodes. A node takes a tensor as input, performs a mathematical operation on it, and produces a new tensor for the next node. Model learning uses the same process as PyTorch with the reduction of a cost function via a back-propagation process. Although the use of python makes it easy to create high-level abstractions and the links between them, the operations are carried out by high-performance C++ binaries. This makes code debugging more difficult and implementation more complex.

Models can be run on local machine, cloud cluster, iOS or Android smartphones via CPU and GPU. Google offers to use its TensorFlow Processing Unit (TPU) chips which are optimized for faster learning.

TensorFlow has a very large community, very rich documentation and many tutorials are available. It is well suited for use in a production environment